Intermittently Failing Tests in the Embedded Systems Domain

Pre-print/PDF here [1] or from arXiv here: [2]

Informal Summary

In this paper we investigate flaky tests. Much of the previous work assumes that testing is almost free, so that a test can simply be re-run over and over again. That assumption does not hold in our context, where software and testware change almost every day, so we needed a new term: we call these tests intermittently failing, and allow software, testware and hardware to change over time.

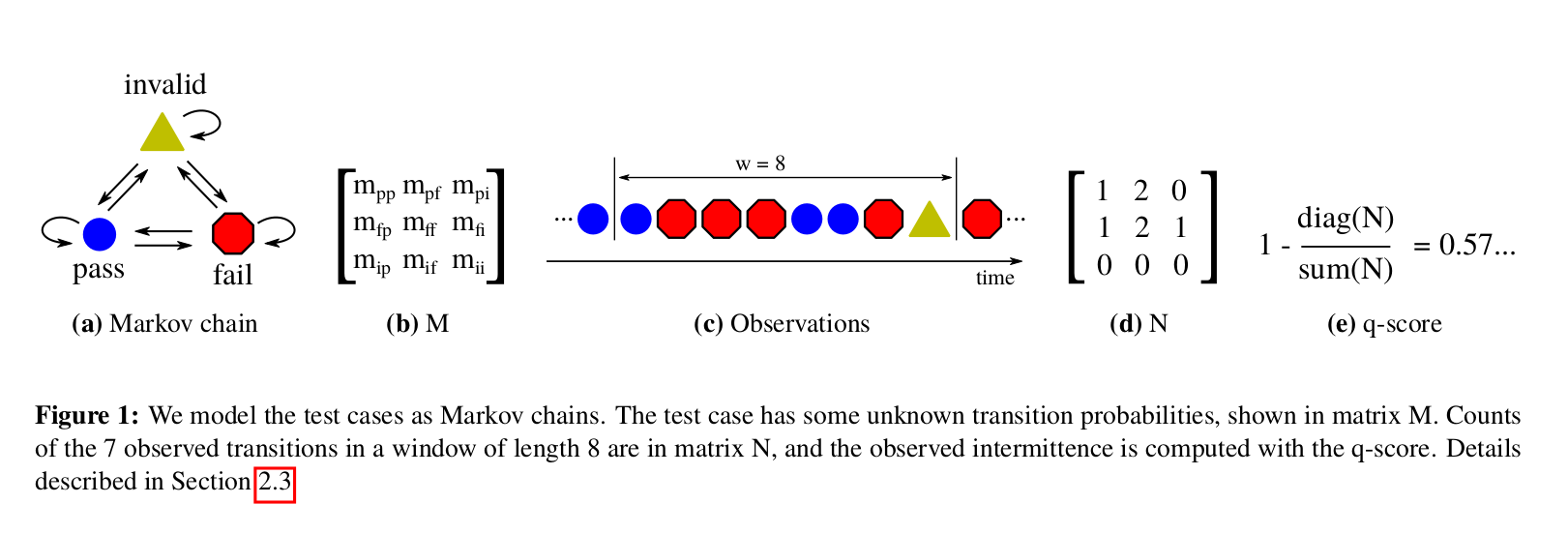

We treat test cases as if they were Markov chains and look at how often they change state. To do this we define the q-score as the observed likelihood that a test case changes verdict within a window of verdicts.
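The exact formula is not reproduced on this page. As a minimal sketch, assuming the q-score is the number of verdict changes between consecutive runs divided by the number of adjacent verdict pairs in the window, it could be computed as below. The function name `q_score` and the verdict strings are hypothetical and chosen only for illustration.

```python
from typing import Sequence


def q_score(verdicts: Sequence[str]) -> float:
    """Fraction of adjacent verdict pairs in the window that differ.

    Assumption: q = (number of verdict changes) / (window length - 1).
    This is an illustrative reading of the description, not the
    paper's verbatim definition.
    """
    if len(verdicts) < 2:
        return 0.0  # no adjacent pair, so no change can be observed
    changes = sum(
        1 for prev, cur in zip(verdicts, verdicts[1:]) if prev != cur
    )
    return changes / (len(verdicts) - 1)


# Hypothetical window of nightly verdicts for one test case.
window = ["pass", "pass", "fail", "pass", "fail", "fail"]
print(q_score(window))  # 3 changes over 5 adjacent pairs
```

Under this reading, a test case that always passes or always fails scores 0, while one that flips verdict on every run scores 1, so a consistently failing test and an intermittently failing test end up at opposite ends of the scale.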

Using the q-score we filtered nine months of nightly testing data, covering more than 5200 test cases and more than half a million verdicts, and identified 230 test cases that were either intermittently or consistently failing. We dug deeper into these and, together with related work, identified nine factors associated with intermittently failing tests:

- test case assumptions

- complexity of testing

- software or hardware faults

- test case dependencies

- resource leaks

- network issues

- random number issues

- test system issues

- code maintenance

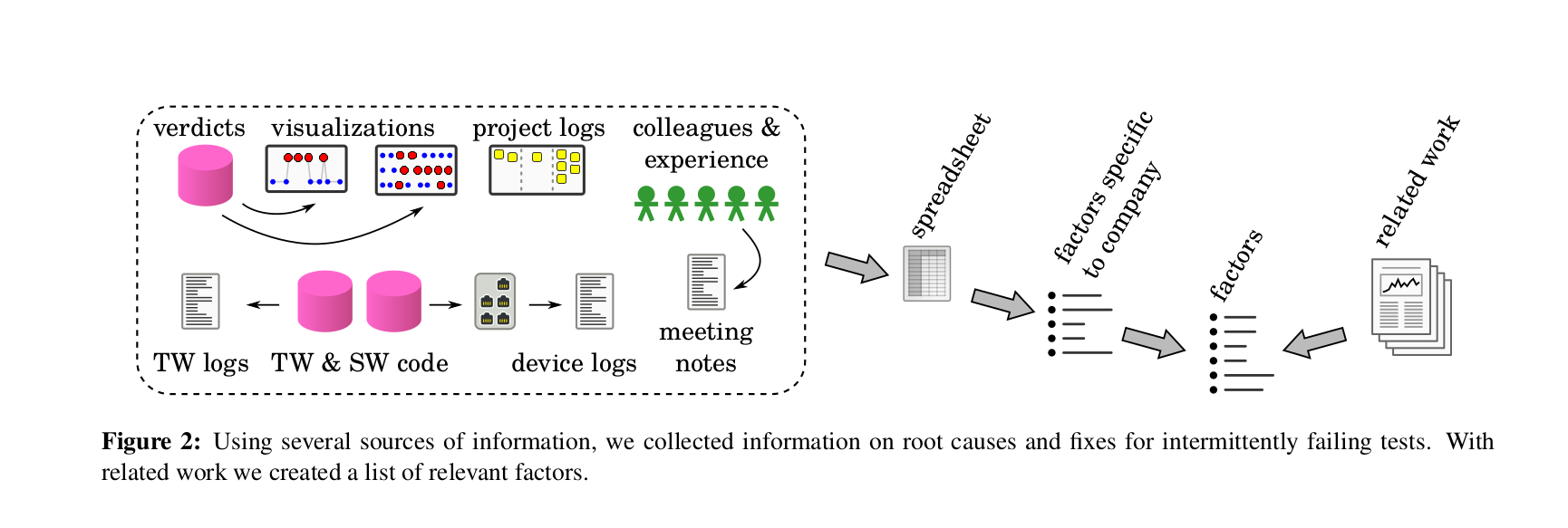

The data analysis took a lot of time, and I ended up having to look in a number of data sources (including people). Here's an overview of the sources.

Abstract

Software testing is sometimes plagued with intermittently failing tests and finding the root causes of such failing tests is often difficult. This problem has been widely studied at the unit testing level for open source software, but there has been far less investigation at the system test level, particularly the testing of industrial embedded systems. This paper describes our investigation of the root causes of intermittently failing tests in the embedded systems domain, with the goal of better understanding, explaining and categorizing the underlying faults. The subject of our investigation is a currently-running industrial embedded system, along with the system level testing that was performed. We devised and used a novel metric for classifying test cases as intermittent. From more than a half million test verdicts, we identified intermittently and consistently failing tests, and identified their root causes using multiple sources. We found that about 1-3% of all test cases were intermittently failing. From analysis of the case study results and related work, we identified nine factors associated with test case intermittence. We found that a fix for a consistently failing test typically removed a larger number of failures detected by other tests than a fix for an intermittent test. We also found that more effort was usually needed to identify fixes for intermittent tests than for consistent tests. An overlap between root causes leading to intermittent and consistent tests was identified. Many root causes of intermittence are the same in industrial embedded systems and open source software. However, when comparing unit testing to system level testing, especially for embedded systems, we observed that the test environment itself is often the cause of intermittence.

Cite as

@inproceedings{strandberg2020,
  author = {Per Erik Strandberg and Thomas Ostrand and Elaine Weyuker and Wasif Afzal and Daniel Sundmark},
  title = {Intermittently Failing Tests in the Embedded Systems Domain},
  month = {July},
  year = {2020},
  booktitle = {International Symposium on Software Testing and Analysis}
}
