Information Flow In Software Testing

This paper is published with open access so you can download it from IEEE here: [1], from MDH here: [2], or from my webpage: [3]

A video presentation is available on YouTube: [4]

Abstract

Background: In order to make informed decisions, software engineering practitioners need information from testing. However, with the trend of increased automation, there is an exponential growth and increased distribution of this information. This paper aims at exploring the information flow in software testing in the domain of embedded systems.

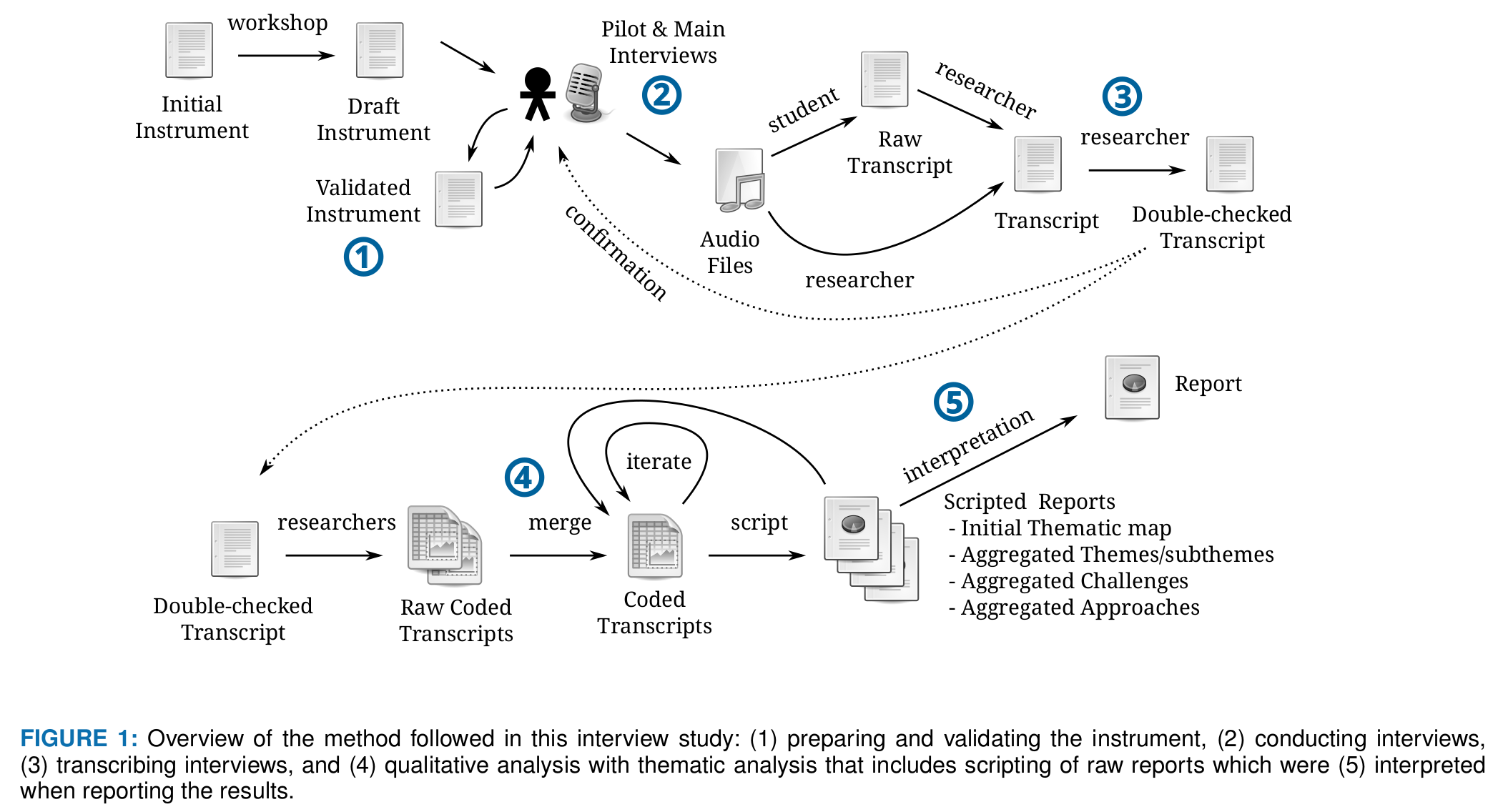

Method: Data was collected through semi-structured interviews of twelve experienced practitioners with an average work experience of more than fourteen years working at five organizations in the embedded software industry in Sweden. Seventeen hours of audio recordings were transcribed and anonymized into 130 pages of text that was analyzed by means of thematic analysis.

Results: The flow of information in software testing can be represented as feedback loops involving stakeholders, software artifacts, test equipment, and test results. The six themes that affect the information flow are: how organizations conduct testing and trouble shooting, communication, processes, technology, artifacts, and the organization of the company. Seven main challenges for the flow of information in software testing are: comprehending the objectives and details of testing, root cause identification, poor feedback, postponed testing, poor artifacts and traceability, poor tools and test infrastructure, and distances. Finally, five proposed approaches for enhancing the flow are: close collaboration between roles, fast feedback, custom test report automation, test results visualization, and the use of suitable tools and frameworks.

Conclusions: The results indicate that there are many opportunities to improve the flow of information in software testing: a first mitigation step is to better understand the challenges and approaches. Future work is needed to realize this in practice, for example on how to shorten feedback cycles between roles, as well as how to enhance exploration and visualization of test results.

Informal Summary

The very first thing I wanted to do as a PhD student was to interview people at other companies about how they make sense of test results. At Westermo this is a non-trivial process: we don't always select all tests for nightly testing (as you can read in Experience Report Suite Builder), soon we won't even use the same devices for the same test case on consecutive nights (as you can read in Automated Test Mapping And Coverage For Network Topologies), and making a summary/visualization is also non-trivial (see Decision Making And Visualizations Based On Test Results). So I wanted to know how other companies solve these problems.

Figure: Overall method.

We made a questionnaire (available at Zenodo [5], or here: [6]) and recruited interviewees at five different companies. We're really happy about the scientific methods used -- I learned a lot about qualitative data analysis from this study.

While working on this paper we had many ethical concerns, and we anonymized the data as much as we could. This led to a follow-up paper on Ethical Interviews In Software Engineering.

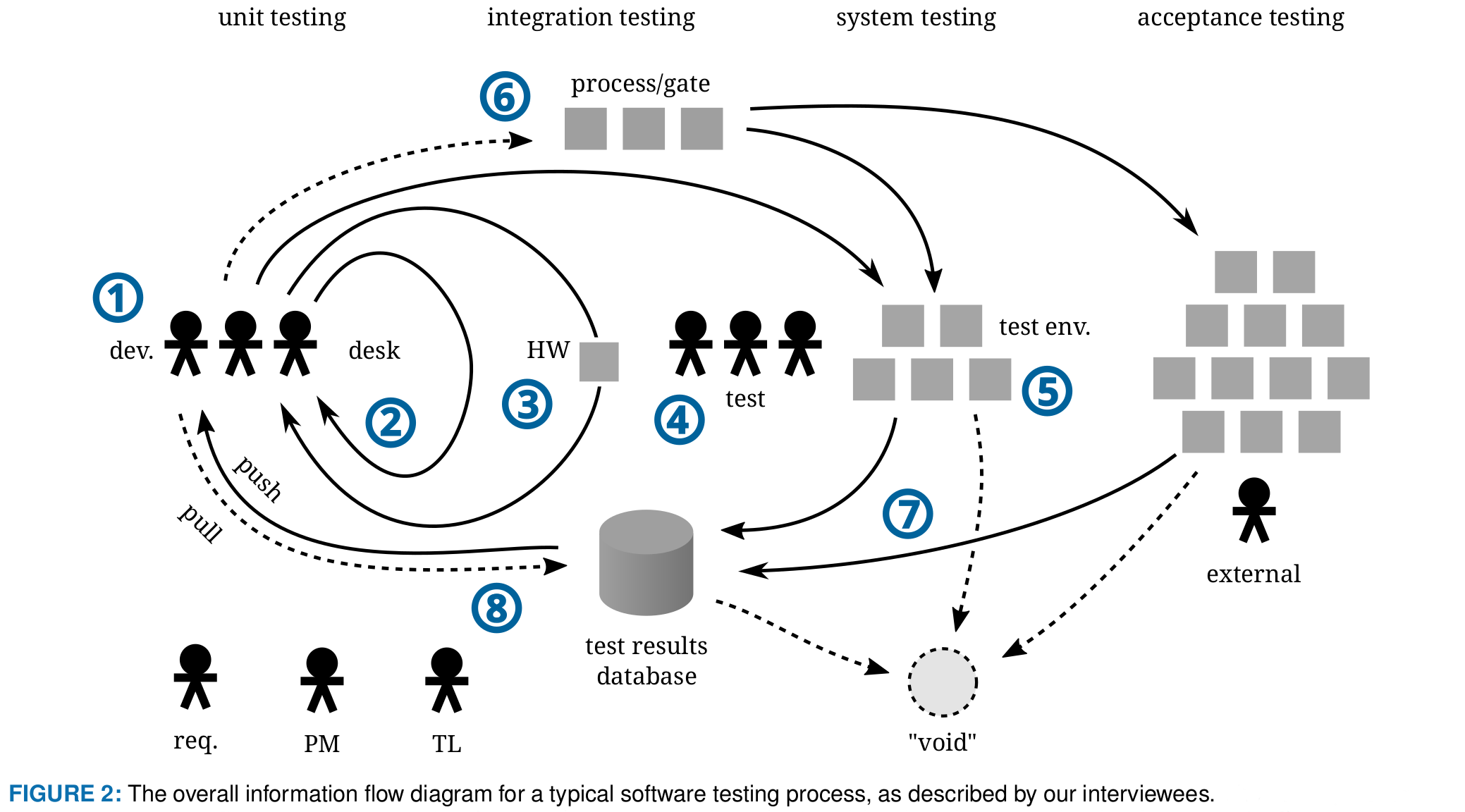

Figure: Overall results.

The most important result from this study is that the information flow can be modeled as a set of feedback loops, starting with the developers and ending with information on test results going back to the developers. The development team (1) produces new software, or software with changes. This is tested in a short and fast loop locally at the developer's desk (2), or using some special hardware in a slower feedback loop (3). If the software is tested in a test environment (5), then there might be gates (6) slowing this process down, and a need for a dedicated test team (4). Testing produces test results in a test results database (7). Sometimes the test results are pushed (8) back to the developers, sometimes the developers need to pull to get access to them (again: 8), and oftentimes the test results are never used ("void"). Peripheral, but important, roles are requirements engineers (req.), project managers (PM), and test leads (TL).

We found six main themes with an impact on these feedback loops: how companies do testing and trouble shooting, their communication patterns, processes, technology, artifacts, and their organizational structure.

Based on the interviews we make the following recommendations to practitioners with respect to challenges in the information flow:

- Details of testing: Store all details from the test execution, allow searching, filtering and links between systems.

- Root cause identification: Make recommendations for where to start fault finding when tests fail.

- Poor feedback: Speed up feedback loops by testing without hardware when possible. Allow filtering of logs.

- Postponed testing: Test without hardware when possible, allow informal/exploratory testing.

- Poor artifacts, poor traceability: Specify requirements on a suitable level. Good traceability allows removal of test scope.

- Poor tools, poor test infrastructure: When adding a new tool, take interoperability with existing tools into account.

- Distances: Short distances improve information flow. Some distances can be mitigated with tools.
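To make the first recommendation a bit more concrete, here is a minimal sketch of what "store all details, allow searching and filtering, and link between systems" could look like. It is not taken from the paper or from any of the interviewed companies: the table layout, the column names (such as `log_path` and `requirement_id`) and the helper functions are all assumptions made up for illustration, using Python's built-in `sqlite3` module.

```python
import sqlite3


def create_results_db():
    """Create an in-memory database with a hypothetical test results schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """
        CREATE TABLE test_results (
            id INTEGER PRIMARY KEY,
            test_case TEXT NOT NULL,
            device TEXT NOT NULL,
            software_version TEXT NOT NULL,
            verdict TEXT NOT NULL,
            log_path TEXT,        -- link to the full log (assumed to live elsewhere)
            requirement_id TEXT   -- link to a requirement in another system
        )
        """
    )
    return conn


def store_result(conn, test_case, device, software_version, verdict,
                 log_path=None, requirement_id=None):
    """Store one test execution together with its details and links."""
    conn.execute(
        "INSERT INTO test_results "
        "(test_case, device, software_version, verdict, log_path, requirement_id) "
        "VALUES (?, ?, ?, ?, ?, ?)",
        (test_case, device, software_version, verdict, log_path, requirement_id),
    )
    conn.commit()


def find_results(conn, verdict=None, test_case=None):
    """Return stored results, optionally filtered by verdict and/or test case."""
    query = (
        "SELECT test_case, device, software_version, verdict, log_path, "
        "requirement_id FROM test_results WHERE 1=1"
    )
    params = []
    if verdict is not None:
        query += " AND verdict = ?"
        params.append(verdict)
    if test_case is not None:
        query += " AND test_case = ?"
        params.append(test_case)
    query += " ORDER BY id"
    return conn.execute(query, params).fetchall()
```

With something like this in place, a developer could call `find_results(conn, verdict="fail", test_case="test_ping")` and follow the stored `log_path` or `requirement_id` to the related system, instead of digging through raw logs.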

We also make recommendations for good approaches:

- Close collaboration between roles: Encourage collaboration, e.g. tester/req. engineer “pair programming” of requirements.

- Fast feedback: Test without hardware when possible, allow informal/exploratory testing.

- Custom test report automation: A test results database can enable automation of test reporting.

- Test result visualization: Visualizations require a test results database, as well as resources for development and maintenance.

- Tools and frameworks: Use suitable tools, make sure they can be integrated and/or support linking.
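As a rough illustration of custom test report automation, the sketch below turns a list of result records into a short plain-text report. The record format `(suite, test_case, verdict)`, the verdict strings and the function names are assumptions for this example only; in practice the records would come from whatever test results database is in use, and the report would be tailored to the role reading it.

```python
from collections import Counter, defaultdict


def summarize(results):
    """Count verdicts per test suite.

    `results` is a list of (suite, test_case, verdict) tuples, a
    hypothetical stand-in for rows fetched from a test results database.
    """
    summary = defaultdict(Counter)
    for suite, _test_case, verdict in results:
        summary[suite][verdict] += 1
    return summary


def format_report(results):
    """Build a plain-text report: one line per suite, then its non-passing tests."""
    summary = summarize(results)
    lines = []
    for suite in sorted(summary):
        counts = summary[suite]
        total = sum(counts.values())
        lines.append(f"{suite}: {counts['pass']}/{total} passed")
        # List every test case in this suite that did not pass.
        for result_suite, test_case, verdict in results:
            if result_suite == suite and verdict != "pass":
                lines.append(f"  {verdict}: {test_case}")
    return "\n".join(lines)
```

The point of the sketch is only that once results are stored in a structured way, a report like this can be generated after each test run and pushed to the people who need it, rather than waiting for someone to pull the information.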

Cite

@article{strandberg2019flow,

author={P. E. Strandberg and E. P. Enoiu and W. Afzal and D. Sundmark and R. Feldt},

journal={IEEE Access},

title={Information Flow in Software Testing -- An Interview Study with Embedded Software Engineering Practitioners},

year={2019},

volume={7},

number={},

pages={46434-46453},

doi={10.1109/ACCESS.2019.2909093},

ISSN={2169-3536},

month={},

}

Informal style:

- P. E. Strandberg, E. P. Enoiu, W. Afzal, D. Sundmark and R. Feldt, "Information Flow in Software Testing -- An Interview Study With Embedded Software Engineering Practitioners," in IEEE Access, vol. 7, pp. 46434-46453, 2019
